Getting the Model

Method 1: Applying for Access on HuggingFace

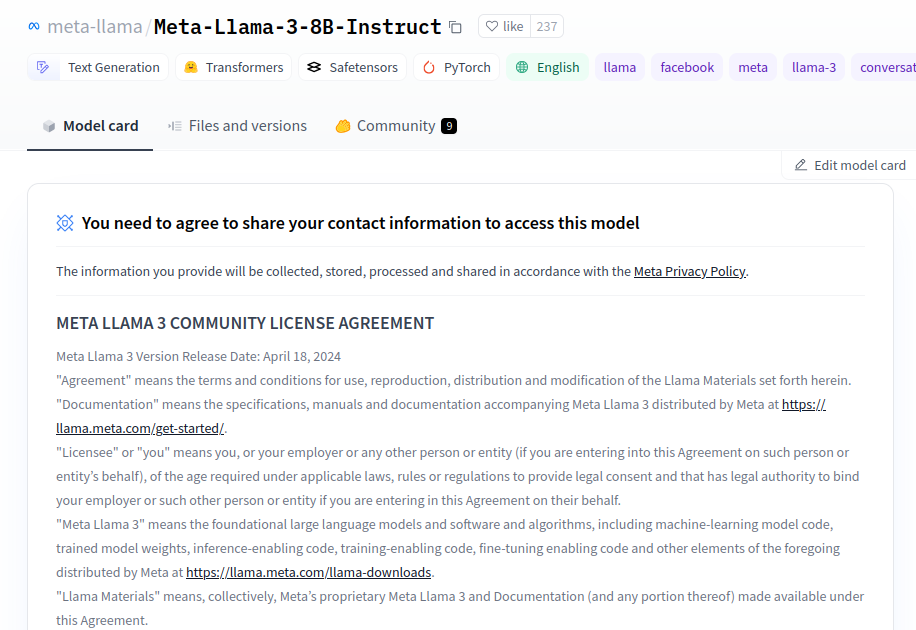

- Log in to HuggingFace and go to https://huggingface.co/meta-llama/Meta-Llama-3-8B-Instruct to apply for access to the meta-llama/Meta-Llama-3-8B-Instruct model (review takes approximately 1 hour)

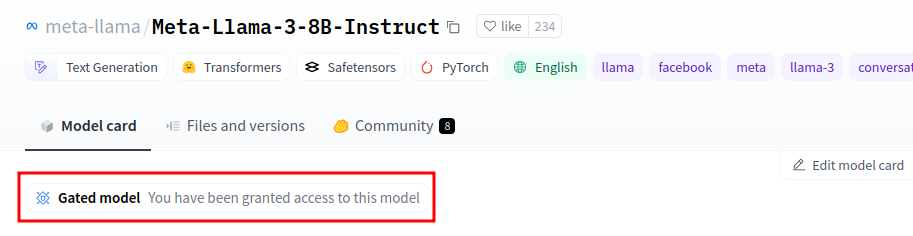

- Once you see the message "You have been granted access to this model", access has been approved and you can download the model


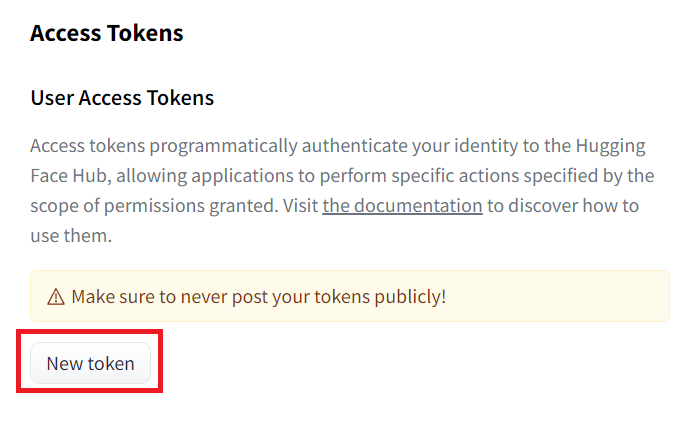

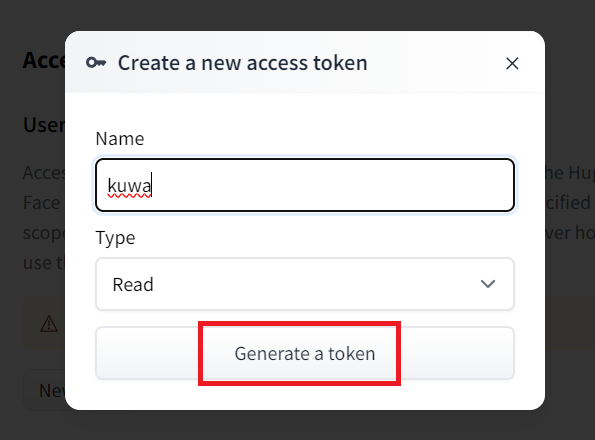

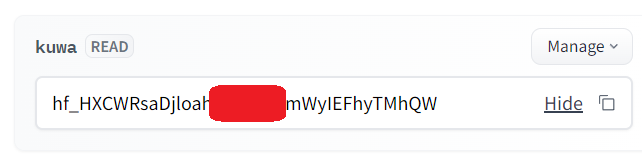

- If you need to use a model that requires login, set up a HuggingFace token first. If the model you are using does not require login, you can skip this step.

  Go to https://huggingface.co/settings/tokens?new_token=true

  Enter a name of your choice for the token

  Then keep this token safe (do not share it with anyone)


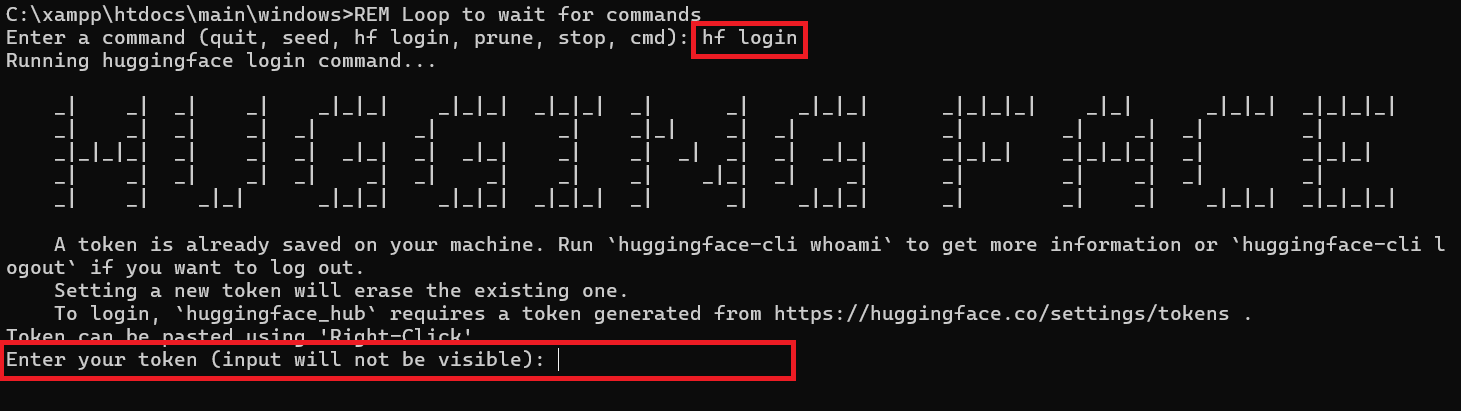

- Next, go to the `kuwa\GenAI OS\windows` folder in the project directory and run `tool.bat`

  Enter the HF token you just generated. You can paste it with a right-click, but the input is not displayed, so paste or type it and then press Enter

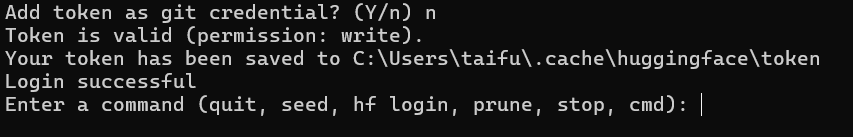

Enter `n` at the Git credential prompt

After that, you will see "Login successful", which confirms that the token has been set up.
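To confirm from a script that a token really has access to the gated repository, one option is to request a small file from it and look at the HTTP status. The sketch below is an illustration only and is not part of Kuwa or `tool.bat`. The helper names are hypothetical, and it assumes that the Hub serves files under `/<repo>/resolve/main/<file>`, that the repository contains a `config.json`, and that a missing token or missing approval is answered with 401 or 403.

```python
import urllib.error
import urllib.request
from typing import Optional

HUB = "https://huggingface.co"


class _NoRedirect(urllib.request.HTTPRedirectHandler):
    """Do not follow redirects, so the token is never forwarded to another host."""

    def redirect_request(self, req, fp, code, msg, headers, newurl):
        return None  # urllib then raises HTTPError carrying the 3xx status


def build_access_check(repo_id: str, token: Optional[str] = None) -> urllib.request.Request:
    """Build (but do not send) a HEAD request for the repo's config.json."""
    url = f"{HUB}/{repo_id}/resolve/main/config.json"  # assumed URL layout
    headers = {"Authorization": f"Bearer {token}"} if token else {}
    return urllib.request.Request(url, headers=headers, method="HEAD")


def has_access(repo_id: str, token: Optional[str] = None) -> bool:
    """Return True if the file is reachable, False on 401/403 (assumed: no access)."""
    opener = urllib.request.build_opener(_NoRedirect)
    try:
        with opener.open(build_access_check(repo_id, token), timeout=30):
            return True
    except urllib.error.HTTPError as err:
        if 300 <= err.code < 400:
            return True  # a redirect to file storage still means the request was accepted
        if err.code in (401, 403):
            return False
        raise
```

Calling `has_access("meta-llama/Meta-Llama-3-8B-Instruct", token)` with your own token requires a network connection. Any other HTTP error is re-raised rather than guessed at.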

Method 2: Direct Download from HuggingFace without Login

- If you don't want to log in to HuggingFace, you can use a third-party re-upload of the model (look for a repository named Meta-Llama-3-8B-Instruct, not a GGUF version):

  Search on HuggingFace: https://huggingface.co/models?search=Meta-Llama-3-8B-Instruct

  For example, NousResearch/Meta-Llama-3-8B-Instruct. Remember the full name, including the part before the slash.
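The name to remember has the form `owner/model`. As a minimal sketch (the function names here are hypothetical and not part of Kuwa), the following checks that a name has that shape, flags GGUF re-uploads by a simple substring test on the name, and builds the corresponding HuggingFace page URL:

```python
from typing import NamedTuple


class RepoId(NamedTuple):
    owner: str
    name: str


def parse_repo_id(model_path: str) -> RepoId:
    """Split 'owner/model' into its two parts, rejecting anything else."""
    parts = model_path.strip().split("/")
    if len(parts) != 2 or not all(parts):
        raise ValueError(f"expected 'owner/model', got {model_path!r}")
    return RepoId(parts[0], parts[1])


def looks_like_gguf(model_path: str) -> bool:
    """Heuristic only: GGUF re-uploads usually carry 'GGUF' in the repository name."""
    return "gguf" in parse_repo_id(model_path).name.lower()


def hub_url(model_path: str) -> str:
    """Return the model page URL on HuggingFace for a given 'owner/model' name."""
    repo = parse_repo_id(model_path)
    return f"https://huggingface.co/{repo.owner}/{repo.name}"
```

For example, `hub_url("NousResearch/Meta-Llama-3-8B-Instruct")` gives the page of the re-upload mentioned above, while a bare `Meta-Llama-3-8B-Instruct` without an owner raises `ValueError`.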

Setting up Kuwa

-

Go to the

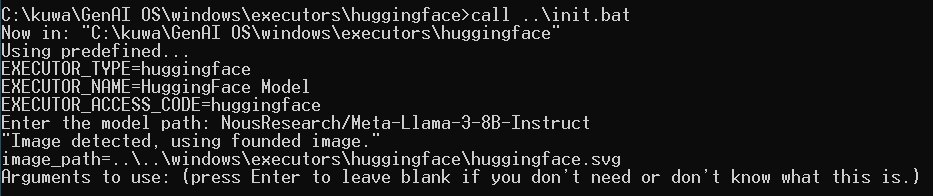

kuwa\GenAI OS\windows\executorsfolder, which should have ahuggingfacesubfolder by default, enter it, and openinit.bat

- Enter the model path when asked. This can be the model's location on HuggingFace, such as:

  Method 1: `meta-llama/Meta-Llama-3-8B-Instruct`

  Method 2: `NousResearch/Meta-Llama-3-8B-Instruct`

- For the image, the script automatically picks up the image found in the folder

- Arguments to use: `"--no_system_prompt" "--stop" "<|eot_id|>"`. If you want to customize the parameters, please refer to the README file in the `executor` folder

- This will automatically create a `run.bat` file in the `kuwa\GenAI OS\windows\executors\huggingface` folder

- Go back to the `kuwa\GenAI OS\windows` folder in the project directory and run `start.bat` to automatically download and start the model.

- Note: Downloaded models are stored in the `.cache\huggingface\hub` folder in your user directory. If you run low on disk space, clean up this model cache.
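To see which downloaded models take up the most space before cleaning up, a short script can total the files per model. This is a minimal sketch, not a Kuwa tool. It assumes the cache is at the default location (it can be moved with HuggingFace environment variables) and that each model is stored in a subfolder named `models--<owner>--<model>`. The function names are hypothetical.

```python
from pathlib import Path
from typing import Dict


def default_hub_cache() -> Path:
    """Default cache location described above: <user dir>/.cache/huggingface/hub."""
    return Path.home() / ".cache" / "huggingface" / "hub"


def cache_usage(hub_dir: Path) -> Dict[str, int]:
    """Map each cached model to the number of bytes it occupies on disk."""
    usage: Dict[str, int] = {}
    if not hub_dir.is_dir():
        return usage
    for repo_dir in hub_dir.iterdir():
        if not (repo_dir.is_dir() and repo_dir.name.startswith("models--")):
            continue
        # Skip symlinks so files linked from snapshots to blobs are counted once.
        total = sum(
            p.stat().st_size
            for p in repo_dir.rglob("*")
            if p.is_file() and not p.is_symlink()
        )
        # Folder names use "--" where the model name has "/" (assumed layout).
        repo_id = repo_dir.name[len("models--"):].replace("--", "/")
        usage[repo_id] = total
    return usage


if __name__ == "__main__":
    # Print the largest models first, sizes in GiB.
    for repo_id, size in sorted(cache_usage(default_hub_cache()).items(), key=lambda kv: -kv[1]):
        print(f"{size / 2**30:8.2f} GiB  {repo_id}")
```

The script only reports sizes and deletes nothing. Removing a model's folder from the cache frees the space, and the model is downloaded again the next time it is needed.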

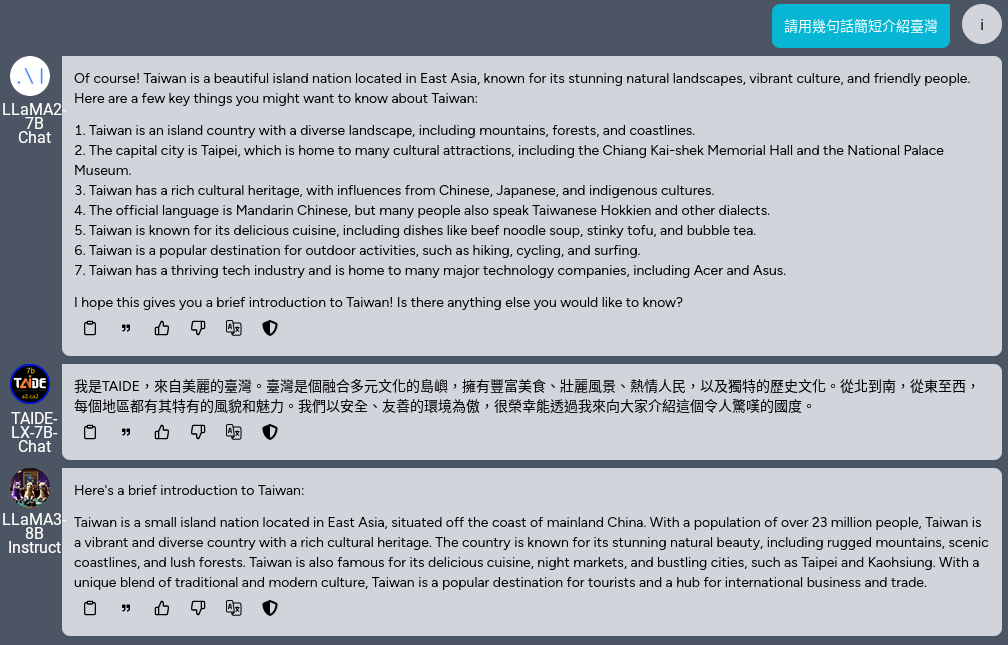
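The `--stop` argument entered in `init.bat` is paired with `<|eot_id|>`, the token Llama 3 emits at the end of each turn. The sketch below shows the general idea of a stop sequence, under the assumption that `--stop` behaves like a conventional stop string that ends the reply at its first occurrence. It is not the executor's actual code (the README remains the reference), and `truncate_at_stop` is a hypothetical name.

```python
from typing import Sequence


def truncate_at_stop(text: str, stop_sequences: Sequence[str]) -> str:
    """Cut generated text at the earliest occurrence of any stop sequence."""
    cut = len(text)
    for stop in stop_sequences:
        if not stop:
            continue  # an empty stop string would match everywhere; ignore it
        idx = text.find(stop)
        if idx != -1:
            cut = min(cut, idx)
    return text[:cut]


# Raw model output that runs past the end of the assistant's turn.
raw = "Hello! How can I help?<|eot_id|><|start_header_id|>user<|end_header_id|>"
reply = truncate_at_stop(raw, ["<|eot_id|>"])
```

Here `reply` keeps only the text before the end-of-turn marker, so the user never sees the model continue into an invented next turn.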

Using Kuwa

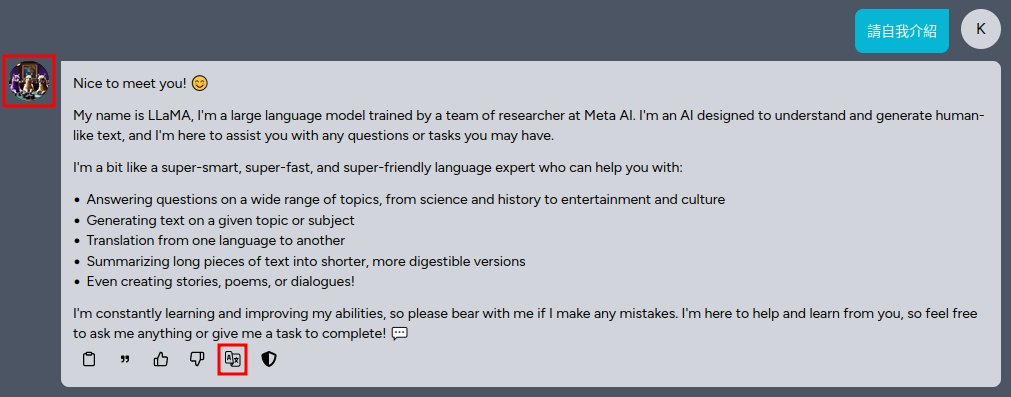

- Wait for the model to finish downloading, then log in to Kuwa to start chatting with Llama3

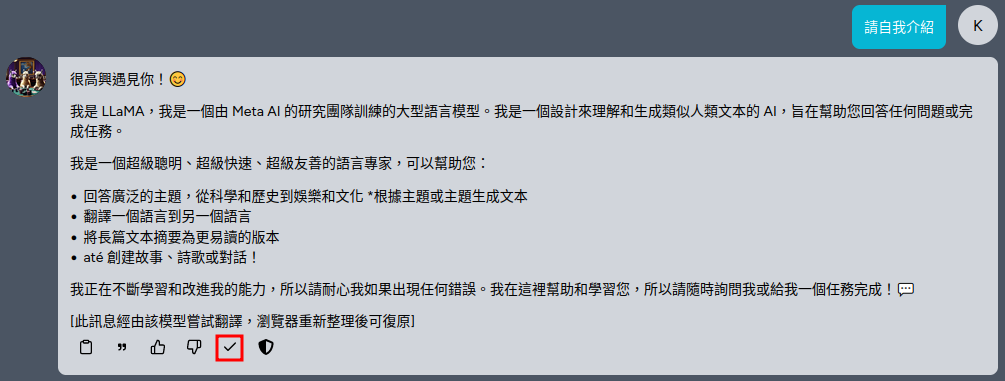

- Llama3 prefers to respond in English; you can use the "Translate this model's response" function to translate its responses into Chinese

- You can use the group chat function to compare the responses of Llama3, Llama2, and TAIDE-LX-7B-Chat